Here is an overview of my private projects

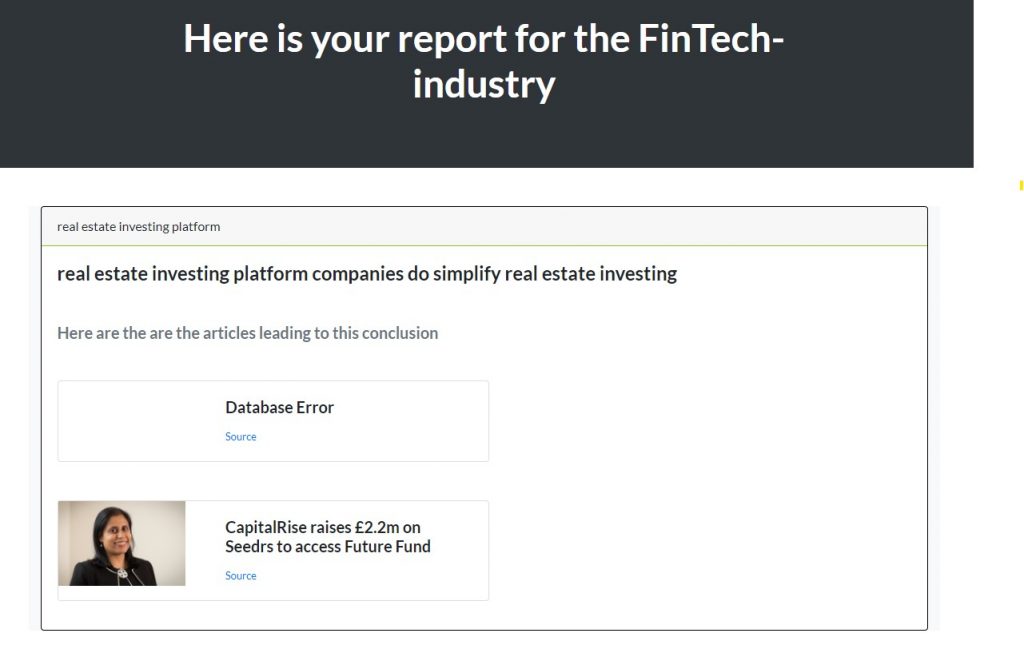

Automatic generation of industry reports from news articles

Link to app. This app analyzes news articles to automatically generate industry reports. In short, it builds a knowledge graph by extracting information from news articles, enriching it with external data, and connecting it with existing data in the knowledge graph. Finally, it generalizes the information in the knowledge graph to a more abstract level and generates conclusions.

The process in detail

- From a news article, the app extracts information about the company, e. g. the company type such as “real estate investing platform”

- Enrich company information: For instance, the parent company, company industry (e. g. FinTech) and company type (e. g. startup)

- From a news article, the app extracts information about the article itself, e. g. by classifying it into article types such as investments

- Store this information in a knowledge graph (using Neo4J)

- Connect the newly inserted information with existing data in the knowledge graph. For instance, connect industry trends with the company type. Example: industry trend “work from home” is connected with a company of type “remote hiring company”

- Generalize the new information: to build conclusions for the report, the data in the knowledge graph is connected to more general categories. For instance, the company activity “empowering employees in their benefit to financial health” is generalized to “HR/Financing functions”

- Use rules to query the knowledge graph and build conclusions like in the screenshot below. Example pattern: <companies of company type> do <company activity> for <target market>
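The last two steps can be illustrated with a minimal sketch. It uses a small in-memory list of triples as a stand-in for the Neo4J graph, so it is not the app's actual implementation. The company names, relation names (`HAS_TYPE`, `DOES`, `TARGETS`), and the generalization table are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical (subject, relation, object) triples; the real app stores its data in Neo4J.
TRIPLES = [
    ("AcmeHire", "HAS_TYPE", "remote hiring company"),
    ("AcmeHire", "DOES", "matching remote candidates"),
    ("AcmeHire", "TARGETS", "startups"),
    ("PayWell", "HAS_TYPE", "financial wellness platform"),
    ("PayWell", "DOES", "empowering employees in their benefit to financial health"),
    ("PayWell", "TARGETS", "employers"),
]

# Hypothetical table that maps a specific activity to a more general category.
GENERALIZATIONS = {
    "empowering employees in their benefit to financial health": "HR/Financing functions",
}


def build_index(triples):
    """Group facts by company; a later triple with the same relation overwrites an earlier one."""
    index = defaultdict(dict)
    for subject, relation, obj in triples:
        index[subject][relation] = obj
    return index


def conclusions(triples, generalizations):
    """Fill the pattern '<company type> do <activity> for <target market>' for each company."""
    sentences = []
    for company, facts in sorted(build_index(triples).items()):
        # Skip companies that lack any of the three facts the pattern needs.
        if not {"HAS_TYPE", "DOES", "TARGETS"} <= facts.keys():
            continue
        # Use the generalized activity if one is known, otherwise the raw activity.
        activity = generalizations.get(facts["DOES"], facts["DOES"])
        sentences.append(
            f"Companies of type '{facts['HAS_TYPE']}' do '{activity}' for '{facts['TARGETS']}'"
        )
    return sentences


for sentence in conclusions(TRIPLES, GENERALIZATIONS):
    print(sentence)
```

In a graph database the same rule would typically be a single pattern-matching query; the dictionary lookup here only mimics that idea.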

Tech stack

- Python for text analysis

- Django for front- and backend

- Neo4J as the database

- Heroku for hosting

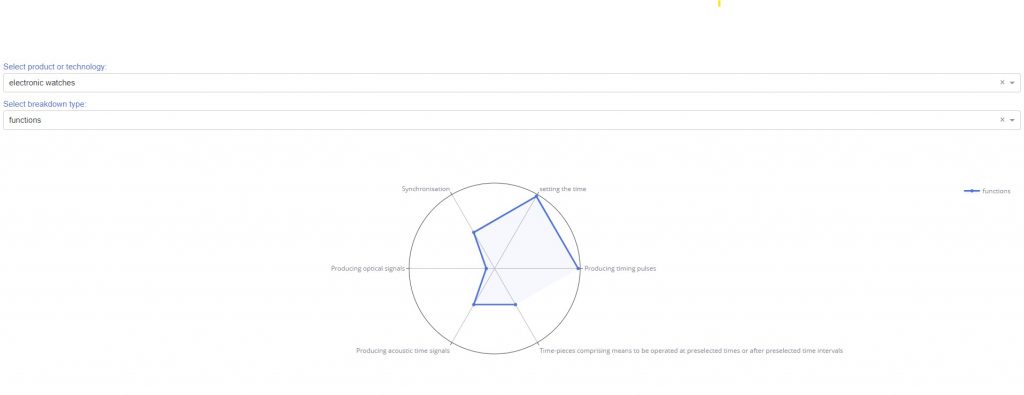

Patent analysis

Link to app. This app analyzes and visualizes patents. It is a Python-based* implementation of several research papers. Concretely:

- Performs query extension for keyword extraction using Wikipedia and other sources

- Extracts applications from patents (i. e. “With the technology in the patent, what can be done? For instance: detect an earthquake”) using SVO/SAOx-analysis based on these papers. I have implemented my own logic for SVO/SAOx-analysis but also used an external library.

- extracts components, functions, and properties from a technology or product

- Preprocessing for patent texts (SVO/SAOx-analysis, normalization etc.)

- Uses the EPO-API to download patents

- Uses the wikipedia-API to parse Wikipedia

- Generates a word cloud for patent keywords

- Predicts product diffusion using the Bass diffusion model, based on these papers

- Classifies technologies according to their novelty (emerging technology, declining technologies etc.) based on these papers

- Shows the relationships between a technology’s actions (i. e. verbs) and its products or sub-technologies

- measures the development focus for a technology or product using a technology radar

- visualizes a technology’s development roadmap

- visualizes technology convergence and connectedness
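As an illustration of the Bass diffusion model mentioned in the list above, here is a minimal sketch of its closed-form adoption curve. It is not the app's code. The parameter values in the usage lines (innovation coefficient `p`, imitation coefficient `q`, and `market_size`) are illustrative assumptions, not values fitted to patent data.

```python
import math


def bass_cumulative_fraction(t, p, q):
    """Fraction of the eventual market that has adopted by time t (closed-form Bass model)."""
    decay = math.exp(-(p + q) * t)
    return (1.0 - decay) / (1.0 + (q / p) * decay)


def bass_adopters_per_period(periods, p, q, market_size):
    """New adopters in each period 1..periods, as differences of the cumulative curve."""
    cumulative = [
        market_size * bass_cumulative_fraction(t, p, q) for t in range(periods + 1)
    ]
    return [cumulative[t] - cumulative[t - 1] for t in range(1, periods + 1)]


# Illustrative parameters only: p = innovation, q = imitation, 1000 potential adopters.
adopters = bass_adopters_per_period(periods=10, p=0.03, q=0.38, market_size=1000)
for period, new_adopters in enumerate(adopters, start=1):
    print(f"period {period}: {new_adopters:.1f} new adopters")
```

In practice `p`, `q`, and the market size are estimated from historical adoption data rather than set by hand.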

Tech stack

- Python (primarily Pandas, nltk, sklearn, NumPy, and spaCy) for text analysis

- MongoDB, Neo4J, and S3 for data storage

- Wikipedia and EPO for getting data

- This library for SVO support

- Plotly Dash for visualization

- Heroku for hosting

*The final data was not generated fully automatically. Some of it was prototyped in Python, some was generated entirely by hand, and some by a combination of both.

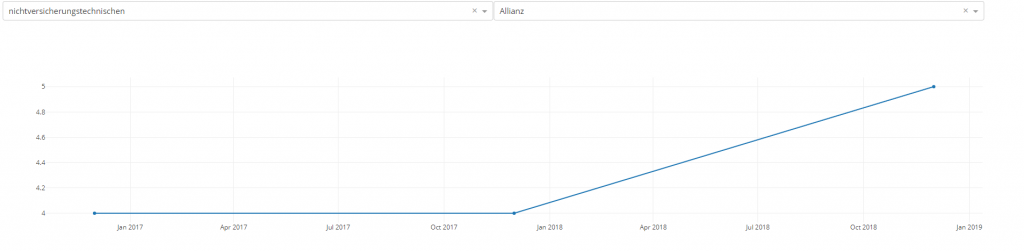

Analyzing industry trends

Link to app. This app analyzes and visualizes industry trends from earnings transcripts and news articles. Concretely:

- Visualizes industry topics over time (see screenshot) based on pre-defined industry topics

- Performs outlier analysis to find interesting industry trends (e. g. trends that have risen sharply in the last couple of months)

- assigns sentiments to articles
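The outlier analysis in the list above can be sketched as follows. The source does not say which method the app uses, so the z-score comparison here is an assumption, and the topic names, monthly counts, and the `threshold` value are made up for illustration.

```python
from statistics import mean, stdev


def rising_topics(monthly_counts, recent_months=2, threshold=2.0):
    """Flag topics whose recent average sits far above their historical average.

    monthly_counts maps a topic to its mention counts, ordered oldest to newest.
    """
    flagged = {}
    for topic, counts in monthly_counts.items():
        history, recent = counts[:-recent_months], counts[-recent_months:]
        if len(history) < 2:
            continue  # not enough history to estimate a spread
        spread = stdev(history)
        if spread == 0:
            continue  # a perfectly flat history gives no meaningful z-score
        z_score = (mean(recent) - mean(history)) / spread
        if z_score > threshold:
            flagged[topic] = round(z_score, 2)
    return flagged


# Hypothetical monthly mention counts per pre-defined topic.
example_counts = {
    "work from home": [3, 4, 3, 5, 4, 12, 15],
    "blockchain": [6, 5, 7, 6, 5, 6, 7],
}
print(rising_topics(example_counts))
```

With these made-up counts only "work from home" is flagged, because its last two months are far above its earlier level.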

Tech stack

- Python (primarily Pandas) for text analysis

- Dash for visualization

- S3 for data storage

- Azure for Sentiment Analysis

- newsapi.ai for obtaining news

- Heroku for hosting

- Kedro as the development framework

Analyzing earnings transcripts

Link to app. Here I have built a pre-processing pipeline for analyzing earnings transcripts. Concretely:

- extract text from PDFs

- generate custom stopwords

- normalize the earnings transcripts

- generate keywords using TF-IDF

- build a simple Dash (Plotly) app to visualize keywords over time per company (see screenshot below)
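The stopword, normalization, and TF-IDF steps above can be illustrated with a short pure-Python sketch. The project itself lists sklearn in its tech stack, so this hand-rolled scoring is only a dependency-free stand-in for illustration, and its weights differ from sklearn's defaults. The stopword list and sample texts are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical custom stopword list; the real pipeline generates its own.
STOPWORDS = {"the", "a", "and", "of", "to", "in", "we", "our", "is", "for"}


def tokenize(text):
    """Normalize to lowercase alphabetic tokens and drop stopwords."""
    return [word for word in re.findall(r"[a-z]+", text.lower()) if word not in STOPWORDS]


def top_keywords(documents, k=3):
    """Return the k highest-scoring TF-IDF terms per document (ties broken alphabetically)."""
    tokenized = {name: tokenize(text) for name, text in documents.items()}
    doc_freq = Counter()
    for tokens in tokenized.values():
        doc_freq.update(set(tokens))  # count each term once per document
    n_docs = len(tokenized)
    result = {}
    for name, tokens in tokenized.items():
        counts = Counter(tokens)
        scores = {
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        }
        ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
        result[name] = [term for term, _ in ranked[:k]]
    return result


# Hypothetical transcript snippets keyed by quarter.
transcripts = {
    "q1": "Cloud revenue grew and cloud margins improved",
    "q2": "Supply chain costs grew and supply remained tight",
}
print(top_keywords(transcripts, k=1))
```

Terms that appear in every document, such as "grew" here, get a score of zero, which is why they drop out of the keyword list.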

Tech stack

- Python: Pandas, NumPy, helpers_data_science, and sklearn for text analysis

- Plotly’s Dash for visualization

- Heroku for hosting

- PostgresDB and AWS S3 for data storage

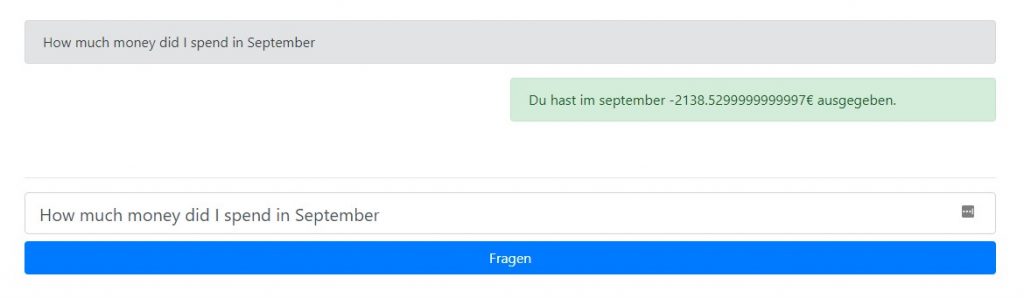

Simple finance chatbot

Link to app. I have built this chatbot to answer simple questions about my finances such as “How much money did I spend in September?”

The chatbot is “stupid”: it does not use a language model but is rule-based. I use spaCy to extract both the intent and the month from the user input.
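The rule-based idea can be sketched in a few lines. The actual chatbot uses spaCy for intent and month extraction; the sketch below swaps that for plain keyword matching so it runs without any model, and the intent names, keyword sets, and transaction format are assumptions made for illustration.

```python
import re

MONTHS = [
    "january", "february", "march", "april", "may", "june",
    "july", "august", "september", "october", "november", "december",
]

# Hypothetical intents and trigger words; the real app derives the intent with spaCy.
INTENT_KEYWORDS = {
    "spending": {"spend", "spent", "pay", "paid"},
    "income": {"earn", "earned", "income"},
}


def parse_question(question):
    """Return (intent, month number), using None for anything that was not recognized."""
    words = re.findall(r"[a-z]+", question.lower())
    intent = next(
        (name for name, keywords in INTENT_KEYWORDS.items() if keywords & set(words)),
        None,
    )
    month = next((MONTHS.index(word) + 1 for word in words if word in MONTHS), None)
    return intent, month


def answer(question, transactions):
    """Answer from (month, amount) pairs; negative amounts are expenses."""
    intent, month = parse_question(question)
    if intent is None or month is None:
        return "Sorry, I did not understand that."
    in_month = [amount for m, amount in transactions if m == month]
    if intent == "spending":
        total = -sum(amount for amount in in_month if amount < 0)
        verb = "spent"
    else:
        total = sum(amount for amount in in_month if amount > 0)
        verb = "earned"
    return f"You {verb} {total:.2f} in {MONTHS[month - 1].capitalize()}."


# Hypothetical transactions: (month number, amount).
sample_transactions = [(9, -40.0), (9, -60.5), (9, 2000.0), (10, -15.0)]
print(answer("How much money did I spend in September?", sample_transactions))
```

Plain keyword matching is brittle (for example, "may" is both a month and a verb), which is one reason to use a proper NLP library for this step.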

Tech stack

- Language: Python

- Django for front- and backend

- spaCy for NLP

- Pandas for data processing

- Heroku for hosting

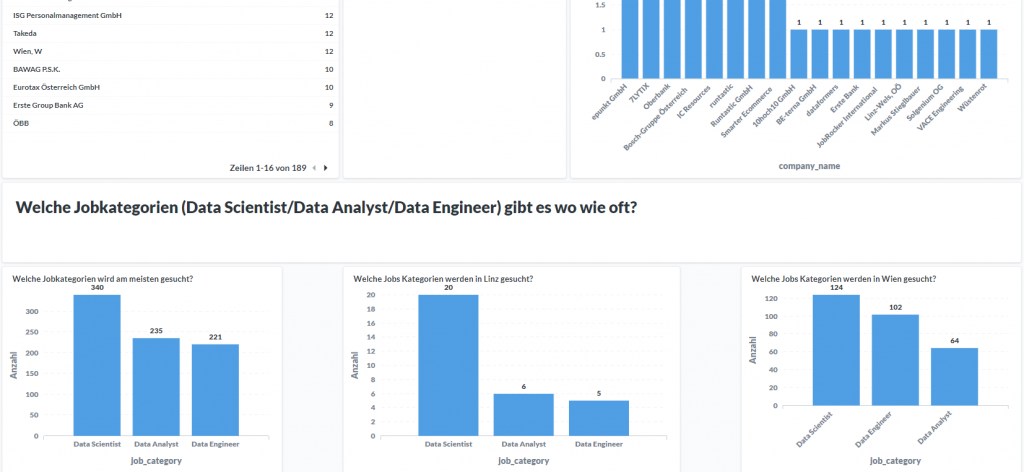

Analysis of job offers

Link to dashboard. I have used Python to analyze job offers. I then visualized the job offers using Metabase.

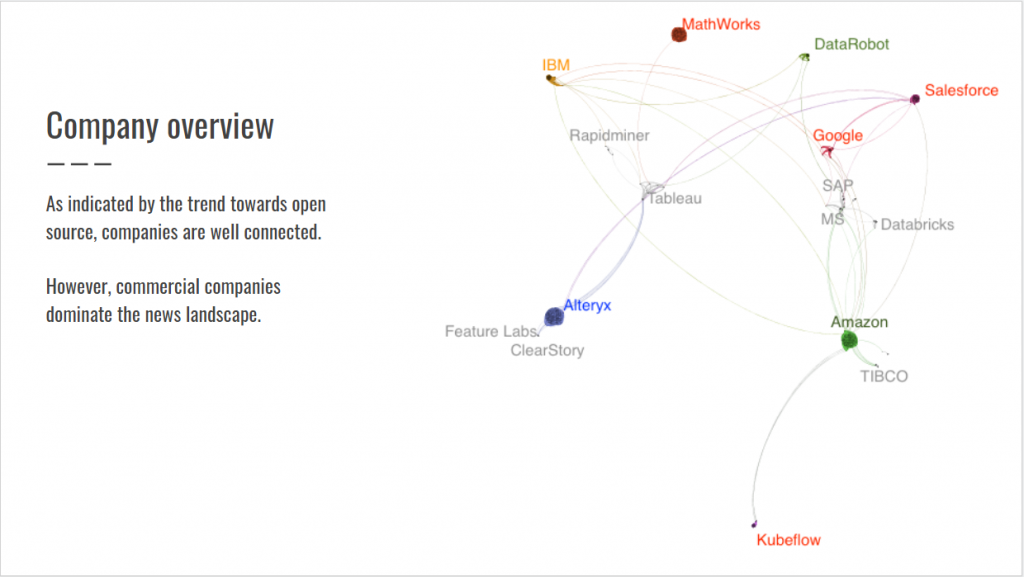

Graph-based analysis of 2000 articles about Machine Learning and Artificial Intelligence

Link to the presentation. I have used Python to analyze 2000 articles about Machine Learning and Artificial Intelligence. I then used Gephi to analyze industry trends.

Python Module for Data Science helpers

Several helpers I use for my data science projects. Install via pip or check it out on GitHub.